If you think AI will magically make your biased hiring decisions disappear, think again.

In 2014, Amazon wanted to solve the problem of ranking job candidates once and for all.

Their solution was a new tool—powered by machine learning and artificial intelligence (AI)—that would take all of the guesswork and human biases out of finding the best candidate for a given position. Feed the tool a hundred resumes, and it would automatically score each of them on a scale of one to five stars.

On paper, the tech was every recruiter's dream. In practice, though, it couldn't be more nightmarish.

The tool scored candidates by comparing words and phrases in submitted resumes to those found in hundreds of past resumes, as well as the resumes of current Amazon employees, to find similarities. The more similarities, the better the score.

But because IT roles at Amazon and elsewhere were (and still are) disproportionately held by men, the tool did something unexpected: It taught itself to penalize female applicants for these roles. If a candidate went to an all-women's college, she was downgraded. Same thing if she mentioned she was in a "women's chess club," for example.

Yikes.

By 2017, the tool was abandoned. In true mad scientist fashion, the thing that Amazon created to eliminate bias in their hiring decisions ended up making it worse.

And if it can happen to Amazon, it can happen to you too.

AI discrimination is no longer just an Amazon problem

Today, you don't need to have the resources of Amazon to leverage AI and machine learning in your recruiting processes; more and more commercially available recruiting software systems are adding this tech to aid in complex and time-intensive talent decisions.

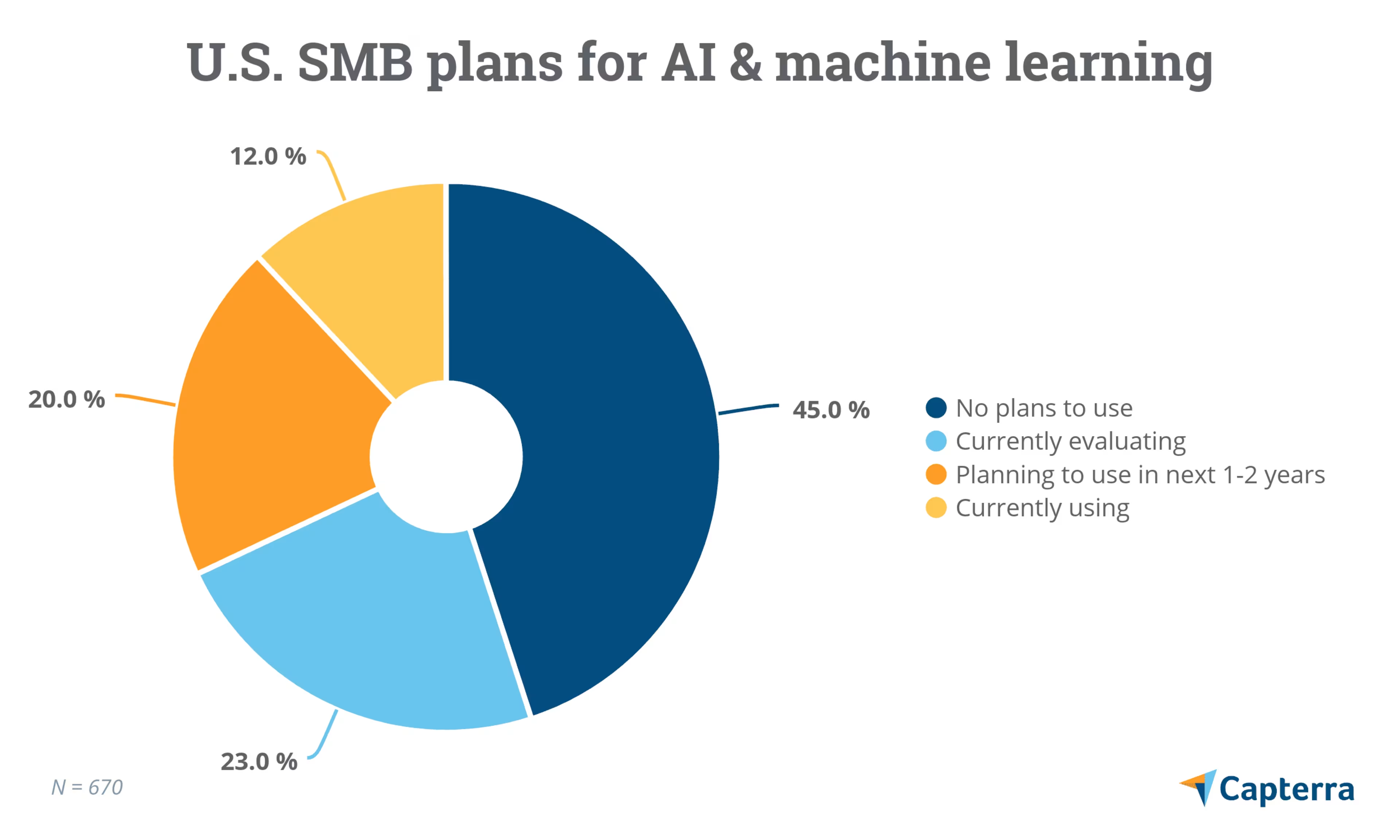

And more and more companies are taking notice. According to Capterra's 2018 Top Technology Trends survey, at least 32% of small and midsize businesses (SMBs) in the United States will be leveraging AI and machine learning by 2020. A 2018 Korn Ferry survey adds that 63% of recruiters believe that AI has already changed the way recruiting is done in their organization.

That's exciting news, and if you're reading this article, I'm going to guess that you've had your eye on one of these AI-powered platforms for your own business.

But as the Amazon example shows, simply adding AI and machine learning to your recruiting processes doesn't just make biases and discrimination disappear. In fact, when implemented carelessly, it can take the undesired tendencies embedded in your recruiting data and blow them up to disastrous proportions.

That's why you need to take steps to mitigate AI discrimination now, before you adopt these recruiting tools.

Below, we'll explain how AI discrimination can happen to teams with the best intentions and offer some tips for how you can prepare to ensure AI discrimination doesn't happen in your organization.

AI discrimination comes in 2 forms

Direct AI discrimination

According to Gartner's "Control Bias and Eliminate Blind Spots in Machine Learning and Artificial Intelligence," direct AI discrimination occurs when an algorithm makes decisions based on sensitive or protected attributes like gender, race, or sexual orientation (full research available to Gartner clients).

Using the Amazon example, imagine if they had explicitly told their AI tool whether each historical resume they were feeding it was from a man or a woman. The AI then learns that men are more likely to be hired into IT roles and decides male applicants are preferred.

This is an example of direct AI discrimination. Not only is it easy to detect, but it's also easy to prevent: Don't give your AI this protected information or factor these attributes into your hiring decisions!

A brief reminder of protected attributes

According to the EEOC, it's illegal to make hiring decisions based on the following:

Age

Disability

Race

Color

Sex (including pregnancy, sexual orientation, and gender identity)

Religion

National origin

Genetics
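To make the "don't give your AI this information" advice concrete, here is a minimal Python sketch that strips protected fields from a candidate record before it reaches any scoring model. The field names and the `PROTECTED_FIELDS` set are hypothetical and only loosely mirror the list above; your own applicant records will be labeled differently.

```python
# Hypothetical field names (an assumption for illustration only).
# Map these to however your own candidate records are labeled.
PROTECTED_FIELDS = {
    "age", "disability", "race", "color", "sex",
    "religion", "national_origin", "genetic_information",
}

def strip_protected(candidate: dict) -> dict:
    """Return a copy of the record with protected attributes removed."""
    return {
        field: value
        for field, value in candidate.items()
        if field.lower() not in PROTECTED_FIELDS
    }

# Made-up record for demonstration.
candidate = {
    "years_experience": 6,
    "skills": ["python", "sql"],
    "sex": "F",
    "age": 41,
}
cleaned = strip_protected(candidate)
print(cleaned)
```

A filter like this only addresses direct discrimination. It does nothing about other fields that happen to correlate with the ones you removed.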

Indirect AI discrimination

Indirect discrimination, on the other hand, is much more common and much harder to prevent, because it occurs as a byproduct of non-sensitive attributes that happen to strongly correlate with those sensitive attributes. This type of AI discrimination happens to even the most well-intentioned recruiters.

An example here would be if an AI determined that taller applicants were more likely to perform better in a role than shorter applicants. Pretty weird but not overtly malicious, right? Except because women tend to be shorter than men on average, this decision would negatively affect female applicants.

This is a tricky area to navigate, and it can stump even the most experienced data scientists. Worst of all, you can't really detect indirect AI discrimination without doing a lot of testing. You have to change the weight of different factors to see if the difference in outcomes for one group compared to the rest exceeds an agreed-upon threshold.
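One simple way to picture that kind of test is to compare selection rates between groups. The Python sketch below divides each group's selection rate by the highest group's rate and flags anything under a threshold. The 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb in US EEOC guidelines, not a value this article prescribes, and the outcome data is made up.

```python
from collections import defaultdict

THRESHOLD = 0.8  # assumption: "four-fifths" rule of thumb; agree on your own value

def selection_rates(outcomes):
    """outcomes: iterable of (group, was_selected) pairs -> {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}

# Made-up outcomes: 30 of 100 men and 18 of 100 women recommended.
outcomes = (
    [("men", True)] * 30 + [("men", False)] * 70
    + [("women", True)] * 18 + [("women", False)] * 82
)
ratios = impact_ratios(outcomes)
flagged = sorted(group for group, ratio in ratios.items() if ratio < THRESHOLD)
print(ratios)
print("Below threshold:", flagged)
```

In this made-up example the ratio for women is about 0.6, so that group is flagged. In practice you would rerun a check like this each time you adjust the weight of a factor and watch how the ratios move.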

All that to say: AI discrimination is hard to mitigate, but it's not impossible. Let's take a look at four things you can do to give the AI you invest in the best shot at producing impactful, non-discriminatory results.

4 things you can do today to mitigate AI discrimination later

You may not have purchased your AI-enabled recruiting software yet, but when you do, you want to be prepared. Giving the AI bad data, or misunderstanding how it works, will all but guarantee disastrous results.

Here are four things you should do to get ready:

1. Collect and tag as much historical recruiting data as you can

Every small business that adopts AI will encounter a harsh truth: Sample size matters.

If your AI only has data from five previous hires for a role to work with, the likelihood of biases and red herrings in your results is high. On the other hand, if your AI has data from 5,000 previous hires to work with, you're much more likely to find meaningful trends.
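To see why, here is a rough Python sketch of how much uncertainty surrounds a rate observed in 5 hires versus 5,000. It uses the standard normal-approximation margin of error for a proportion, which is itself crude at very small sample sizes, and the 60% figure is made up for illustration.

```python
import math

def margin_of_error(rate, n, z=1.96):
    """Approximate 95% margin of error for a rate observed in n samples."""
    return z * math.sqrt(rate * (1 - rate) / n)

observed_rate = 0.6  # made-up: 60% of past hires share some trait

for n in (5, 5000):
    moe = margin_of_error(observed_rate, n)
    print(f"n={n}: 60% plus or minus {moe * 100:.1f} points")
```

With five hires the margin is roughly 43 percentage points, so the "trend" could easily be noise. With 5,000 it shrinks to about 1.4 points.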

Large businesses that do a ton of hiring are at an obvious advantage here, but if you're a smaller organization, just be patient. And if you haven't adopted an applicant tracking system to help securely house this data and ensure its consistency, do so now.

2. Address factors that are biasing your data

Biased data begets biased AI recommendations. Is your recruiting data really as neutral as you think it is?

Take your job descriptions as an example. Research has shown that certain words and phrases can influence who applies to your job postings. Men tend to gravitate toward words like "rockstar" and "superior," while older workers may hesitate if a job description includes a phrase like "up-and-coming."

Consider investing in a job description tool, or a recruiting system with a job description analysis feature set, to detect any of these biased wording choices that could be influencing who applies to your company.
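If you want a feel for what such a tool does under the hood, the Python sketch below scans a job description for a short list of flagged terms. The list contains only the three examples mentioned in this article, and the reasons attached to them are paraphrased from it. A real tool would rely on a much larger, research-backed vocabulary, so treat `FLAGGED_TERMS` as a placeholder.

```python
import re

# Placeholder vocabulary: only the examples named in this article.
FLAGGED_TERMS = {
    "rockstar": "may appeal more to male applicants",
    "superior": "may appeal more to male applicants",
    "up-and-coming": "may discourage older applicants",
}

def flag_terms(text):
    """Return (term, reason) pairs for each flagged term found in the text."""
    lowered = text.lower()
    hits = []
    for term, reason in FLAGGED_TERMS.items():
        # Match whole terms only, so "superiority" does not trigger "superior".
        pattern = r"(?<![\w-])" + re.escape(term) + r"(?![\w-])"
        if re.search(pattern, lowered):
            hits.append((term, reason))
    return hits

# Made-up job description for demonstration.
posting = "We need a Rockstar engineer to join our up-and-coming team."
for term, reason in flag_terms(posting):
    print(f"{term}: {reason}")
```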

3. Look into free and open source tools that evaluate algorithm fairness

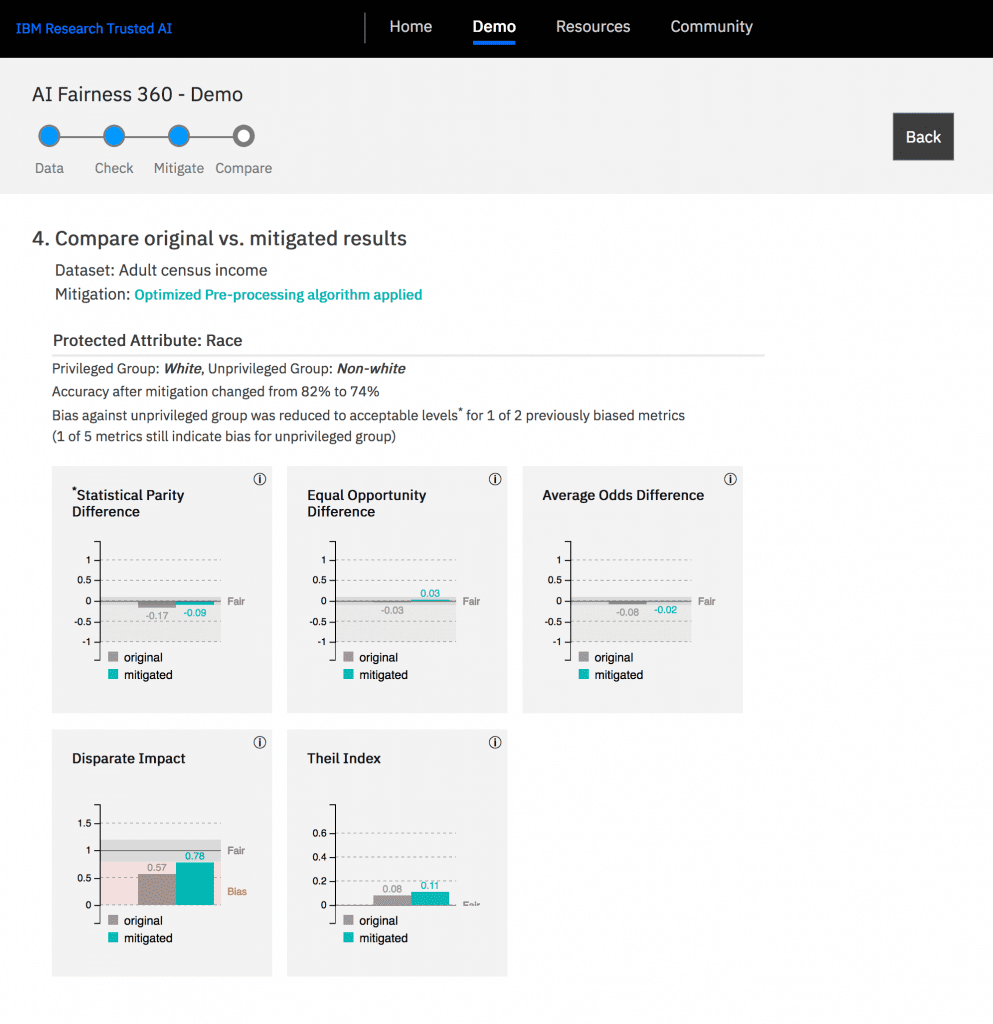

Remember earlier when I said you had to determine how the weighting of different factors in your algorithm affects the outcomes of specific groups to test for indirect discrimination? Then remember when you sighed heavily because you have no idea how to do that?

Luckily, there are free and open source tools out there to help even data laypeople detect biases in their AI algorithms. Find a tool you like, complete some tutorials, and start to get familiar with how you can test for AI discrimination—especially if you plan to build an algorithm in-house.

An example of an AI fairness test done in IBM's AI Fairness 360.

Gartner Digital Markets Analyst Lauren Maffeo has additional advice on this topic.

4. Ask AI vendors to open the 'black box'

The "black box" problem in machine learning and AI refers to the fact that, because of a lack of transparency, it can be incredibly difficult to figure out how AI made a decision or came to a certain conclusion.

That problem is exacerbated when you're essentially buying an AI tool off the shelf, because vendors are reluctant to spill the beans on the "secret sauce" in their system.

All that to say, the more information you can glean on how a vendor's AI tool actually works, the better prepared you'll be to address problems or make necessary tweaks down the line.

Don't rely on AI alone: Become a recruiting centaur

As much as we fear the robot takeover, there's always that sliver of hope that one day AI and machine learning will solve all our recruiting woes and make the right hiring decision for us every time. Unfortunately, Dr. Stylianos Kampakis, data scientist and author of "The Decision Maker's Handbook to Data Science," says that's unlikely to happen anytime soon.

"When recruiting there are a myriad of factors involved in making the best hire, and quite often these factors might not be reflected on someone's [resume]," Kampakis says. "Assuming that it will be easy to make an algorithm to make the perfect hire is wishful thinking."

That's why recruiting has to be what Gartner analyst Darin Stewart calls a "centaur": a human/AI hybrid where each half plays an important role in the hiring decision process (full research available to Gartner clients). The AI may make a recommendation, but a human should still make the final call. That's how you avoid AI discrimination.